Ismail Khalfaoui Hassani

I’m a PhD student at the University of Toulouse, France. I am advised by Timothée Masquelier and Thomas Pellegrini.

I am currently in the final year of my PhD, which has been supported by the Artificial and Natural Intelligence Toulouse Institute (ANITI).

My research interests are:

- Artificial Intelligence.

- Deep Learning.

- Differential optimization.

- Computer Vision.

- Audio and Speech Recognition.

- Spiking Neural Networks.

Research

Dilated Convolution with Learnable Spacings

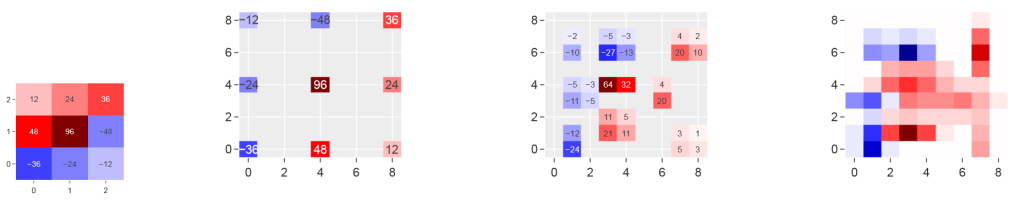

Dilated Convolution with Learnable Spacings (DCLS) is a convolution method in which the positions of the weights within the kernel are learned by gradient descent, together with the weights themselves. Because kernel positions are integers, they are not directly differentiable; DCLS solves this with interpolation, spreading each weight over neighboring integer positions using coefficients that vary continuously with its real-valued position.
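
As a minimal sketch of the interpolation idea, assuming the bilinear scheme of the original DCLS, the code below builds a dense 2D kernel from a few weights placed at real-valued positions. The names `dcls_kernel_2d`, `weights`, `positions` and `size` are hypothetical, and this is a plain-Python illustration, not the DCLS library implementation. Each weight is split over its four nearest cells with coefficients that are linear in the fractional part of its position, so the kernel changes continuously as a position moves and a gradient with respect to that position exists almost everywhere.

```python
import math


def dcls_kernel_2d(weights, positions, size):
    """Build a dense size x size kernel from sparse weights at real-valued positions.

    weights:   list of floats, one per kernel element (hypothetical layout)
    positions: list of (row, col) float pairs, clamped to [0, size - 1]
    size:      side length of the dense kernel
    """
    kernel = [[0.0] * size for _ in range(size)]
    for w, (py, px) in zip(weights, positions):
        # Keep each position inside the kernel support.
        py = min(max(py, 0.0), size - 1.0)
        px = min(max(px, 0.0), size - 1.0)
        i0, j0 = int(math.floor(py)), int(math.floor(px))
        ry, rx = py - i0, px - j0  # fractional parts in [0, 1)
        # Bilinear interpolation: spread w over the four nearest cells.
        for di, cy in ((0, 1.0 - ry), (1, ry)):
            for dj, cx in ((0, 1.0 - rx), (1, rx)):
                i, j = i0 + di, j0 + dj
                # A neighbor past the edge only occurs with a zero coefficient.
                if i < size and j < size:
                    kernel[i][j] += w * cy * cx
    return kernel
```

For example, a single weight 2.0 at position (1.25, 2.5) in a 4x4 kernel gives 0.75 at cells (1, 2) and (1, 3) and 0.25 at cells (2, 2) and (2, 3), preserving the total mass of 2.0. In a real network the same construction would be written with tensor operations so that an autodiff framework can backpropagate into the positions.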

DCLS: beyond bilinear interpolation

DCLS is a recently proposed variation of dilated convolution in which the spacings between the non-zero elements of the kernel, or equivalently their positions, are learnable. The original DCLS used bilinear interpolation and thus only considered the four nearest pixels. Here we show that longer-range interpolations, in particular Gaussian interpolation, improve performance on ImageNet-1k classification for two state-of-the-art convolutional architectures (ConvNeXt and ConvFormer) without increasing the number of parameters.
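
To contrast with the four-pixel bilinear case, here is a sketch of Gaussian interpolation under stated assumptions: an isotropic Gaussian with a fixed, illustrative `sigma`, and coefficients normalized to sum to one so that each weight keeps its mass. The function name and parameters are hypothetical and do not reproduce the exact formulation used in this work. Because every kernel cell receives a non-zero share, each weight's position interacts with the whole kernel rather than only its four nearest pixels, while the sketch still stores just one weight and one position per element.

```python
import math


def gaussian_dcls_kernel_2d(weights, positions, size, sigma=0.5):
    """Dense kernel where each weight is spread by a normalized 2D Gaussian.

    weights:   list of floats, one per kernel element (hypothetical layout)
    positions: list of (row, col) float pairs
    sigma:     fixed, illustrative spread of the Gaussian (assumption)
    """
    kernel = [[0.0] * size for _ in range(size)]
    for w, (py, px) in zip(weights, positions):
        # Unnormalized Gaussian coefficient for every cell of the kernel.
        coeffs = [[math.exp(-((i - py) ** 2 + (j - px) ** 2) / (2 * sigma ** 2))
                   for j in range(size)] for i in range(size)]
        total = sum(map(sum, coeffs))  # normalize so the weight's mass is preserved
        for i in range(size):
            for j in range(size):
                kernel[i][j] += w * coeffs[i][j] / total
    return kernel
```

With a small `sigma`, most of each weight's mass lands on the cells closest to its position; with a larger `sigma`, it spreads further across the kernel, which is the longer-range behavior the bilinear scheme lacks.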

Learning Delays in Spiking Neural Networks using DCLS

Here, we propose a new discrete-time algorithm that learns delays in deep feedforward spiking neural networks (SNNs) offline, using backpropagation. To simulate delays between consecutive layers, we use 1D convolutions across time. The kernels contain only a few non-zero weights (one per synapse), whose positions correspond to the delays. These positions are learned together with the weights using the recently proposed Dilated Convolution with Learnable Spacings (DCLS).
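
The delay mechanism can be sketched in plain Python as follows, assuming a single postsynaptic neuron, binary spike trains, and linear interpolation of fractional delays (a simplification chosen for this illustration; the names `delay_kernel` and `delayed_layer` are hypothetical). Each synapse gets a temporal kernel with one non-zero weight at its delay, and the neuron's input is the causal convolution of each presynaptic spike train with its kernel, summed over synapses. The spiking neuron model that would consume this input is omitted.

```python
import math


def delay_kernel(weight, delay, length):
    """1D kernel holding a single synaptic weight at a (possibly fractional) delay.

    Linear interpolation (an assumption for this sketch) splits the weight between
    floor(delay) and floor(delay) + 1, so the kernel varies continuously with delay.
    """
    kernel = [0.0] * length
    d = min(max(delay, 0.0), length - 1.0)  # keep the delay inside the kernel
    k0 = int(math.floor(d))
    r = d - k0
    kernel[k0] += weight * (1.0 - r)
    if k0 + 1 < length:
        kernel[k0 + 1] += weight * r
    return kernel


def delayed_layer(spikes, weights, delays, length):
    """Input to one postsynaptic neuron from several presynaptic spike trains.

    spikes:          list of presynaptic spike trains (lists of 0/1 over time)
    weights, delays: one value per synapse
    Causal convolution across time: out[t] = sum_i sum_k K_i[k] * x_i[t - k].
    """
    T = len(spikes[0])
    out = [0.0] * T
    for x, w, d in zip(spikes, weights, delays):
        kernel = delay_kernel(w, d, length)
        for t in range(T):
            for k, kv in enumerate(kernel):
                if kv != 0.0 and t - k >= 0:
                    out[t] += kv * x[t - k]
    return out
```

For instance, a spike at t = 0 through a synapse with weight 0.5 and delay 3 arrives at t = 3, while a spike at t = 1 through a synapse with weight 1.0 and delay 1.5 is split equally between t = 2 and t = 3, giving the input [0, 0, 0.5, 1.0, 0, 0] over six time steps.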

Audio classification with DCLS

DCLS is a recent convolution method in which the positions of the kernel elements are learned during training by backpropagation. Its usefulness has recently been demonstrated in computer vision (ImageNet classification and downstream tasks). Here we show that DCLS is also useful for audio tagging, using the AudioSet classification benchmark.